Markov Chains

Source

- https://brilliant.org/wiki/markov-chains/

- https://people.engr.tamu.edu/andreas-klappenecker/csce658-s18/markov_chains.pdf

- https://math.libretexts.org/Bookshelves/Applied_Mathematics/Applied_Finite_Mathematics_(Sekhon_and_Bloom)/10%3A_Markov_Chains/10.01%3A_Introduction_to_Markov_Chains

- https://www.probabilitycourse.com/chapter11/11_2_4_classification_of_states.php

Stochastic Process

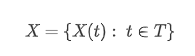

A stochastic process is a collection of random variables (source) and is defined as:

The index t usually represents time. We call X(t) the state of the process at time t. We will mainly choose T to be the set of nonnegative integers

Markov Chains Concept

“A Markov chain is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. The defining characteristic of a Markov chain is that no matter how the process arrived at its present state, the possible future states are fixed. In other words, the probability of transitioning to any particular state is dependent solely on the current state and time elapsed. The state space, or set of all possible states, can be anything: letters, numbers, weather conditions, baseball scores, or stock performances”.

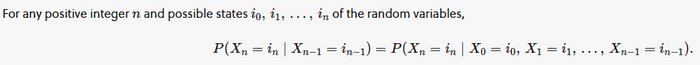

The Markov Property

Future state depends only on the current state and not on the states before, so the current state (at time t — 1) is sufficient to determine the probability of the next state (at time t). It can be written as:

Because Markov chains are “memory-less” (past states don’t matter), many real life examples don’t satisfy the Markov property

Time-homogeneous

A Markov chain is called homogeneous if the probability of any state transition is independent of time. Such a process may be visualized with a labeled directed graph, for which the sum of the labels of any vertex’s outgoing edges is 1 (as they represent probabilities).

We assume here that Markov chain are homogeneous.

State space

The State space (the set of all possible states) can be:

- discrete state space if the set of values of random variables (T) is infinite

- finite state space if the set of values of the random variables is a finite set such as

Graph representation

We can see below an example of a time-homogeneous Markov Chain. If we are in current state A, the probability that the future state will be B is 0.7 and the probability that the future state will be A is 0.3.

Transition matrix

A transition probabilities determine the Markov chain and is defined as:

A transition matrix P for Markov chain {X} at time t is a matrix containing information on the probability of transitioning between states

Each row in the matrix represents an initial state and each column represents a terminal state. Therefore the matrix must be a square matrix, with the same number of rows as columns)

Properties:

- P(i,j) is the probability that the chain moves from state i to state j. For example the entry in row 2 column 3 would show the probability that a bike that is initially at station B will be at station C one day later

- all entries are between 0 and 1 because they are probabilities

- each row of the matrix is a probability vector, and the sum of its entries is 1

Example of a transition matrix and the related graph:

Predicting future states

Let’s say we have a very simple bike share program with only 3 stations: A, B, C. Suppose that all bicycles must be returned to the station at the end of the day. Looking at the first row of the transition matrix that represents bikes initially at station A, we see that 30% of the bikes borrowed from station A are returned to station A, 50% end up at station B, and 20% end up at station C, after one day

To represent the distribution among the states at a particular point in time, we use a row matrix called a state vector. The entries show the distribution by state at a given point in time:

So at that particular moment (the initial state denoted by 0), 45% of the bicycles are in station B.

If we want to determine the distribution after one transition, we’ll need to find a new state vector that we’ll call V1. The subscript 1 indicates this is the distribution after 1 transition has occurred. We find V1 by multiplying V0 by the transition matrix T, as follows:

After 1 day (1 transition), 16 % of the bikes are at station A, 44.5 % are at station B and 39.5% are at station C.

Suppose now that we want to know the distribution of bicycles at the stations after two days. We need to find V2, the state vector after two transitions. To find V2 , we multiply the state vector after one transition V1 by the transition matrix T

T² can be seen as the probability of a bike being at a particular station after two transitions, given its initial station

- Entry t13 in row 1 column 3 tells us that a bike that is initially borrowed from station A has a probability of 0.37 of being in station C after two transitions

- Entry t32 in row 3 column 2 tells us that a bike that is initially borrowed from station C has a probability of 0. 19 of being in station B after two transitions

Therefore:

- T^n tells us the probability of a bike being at a particular station after n transitions

- if we multiply the initial state vector V0 by T^n, the resulting row matrix Vn=V0T^n is the distribution of bicycles after n transitions

Other properties

Irreducible and reducible

A Markov chain is known as irreducible if there exists a chain of steps between any two states that has positive probability. For example the below Markov chain is not irreducible because no other state can be reached from 2

Periodic and aperiodic

Considering the Markov chain below, to start from state 0 and come back to state 0 is only possible at times n = 3, 6, … So if n is not divisible by 3, the probability to come back to state 0 is 0. Such state is called a periodic state with period d(0) = 3

The period of a state i is the largest integer d where the probability to start and finish in state i in n times is equal to 0, whenever n is not divisible by d.

- if d(i) > 1, we say the state i is periodic

- if d(i) = 1, we say the state i is aperiodic

- a Markov chain is said to be aperiodic if all of its states are aperiodic