4 classification algorithms to deal with unbalanced datasets

Working on extremely unbalanced datasets can be frustrating as many algorithms are not well equipped to deal with this situation. As explained in the paper Exploratory Undersampling for Class-Imbalance Learning, “algorithms that do not consider class imbalance tend to be overwhelmed by the majority class and ignore the minority class” which can create poor results. There are two main approaches to deal with imbalanced data: Cost sensitive learning and sampling techniques. Cost sensitive learning techniques assign a high cost to misclassification of the minority class and try to minimize the overall cost. Sampling techniques can be divided into three categories, undersample, oversample and more complex methods like SMOTE. By reducing the majority class cases, undersample has the advantage to make the training time shorter but the downside is that you lose information related to the majority class in the process. On the other hand, because you are duplicating your minority class cases, oversample tends to overfit and make the training time longer.

We are going to check four classification algorithms which you can use with unbalanced datasets. Each of them use cost sensitive learning or sampling techniques to improve the modeling process. It is recommended to use them only when dealing with unbalanced datasets. The four algorithms are:

- Balanced Random Forest

- Easy Ensemble

- Balanced Cascade

- SMOTEBoost

I won’t go into the machine learning framework process when you deal with imbalanced data (that’s a story for another blog post); however, just remember that it is good practice to get a baseline (for example by training a log reg model on the untouched data — no sampling, no cost weight — so that you can compare the performance of each model you test. Also, I noticed that several papers (see the sources section at the end of the post) recommend using AUC as one of the possible performance metrics.

Note on using the imblearn package for python: one pitfall is that although you train on a transformed data (for example if you test undersampling), you have to make sure that the model is tested against an untouched dataset. In the case of cross validation, Sklearn doesn’t allow you to do this so you have to use the imblearn package and its pipeline functions instead.

Balanced Random Forest

In the Using Random Forest to Learn Imbalanced Data paper, Chao Chen, Andy Liaw and Leo Breiman propose two approaches based on the Random Forest algorithm to deal with extremely imbalanced datasets. One is based on sampling technique and the other one plays with cost weights. We will focus here on the sampling technique.

One of the issue with undersampling is that you lose information related to the majority class in the process. One way to deal with this is to mix undersampling and the ensemble concept. In the Balanced Random Forest algorithm, each time you create a new tree, you randomly undersample the majority class so that the number of minority class (taken from a bootstrap sample) and the number of majority class cases are equals. Hence, your tree will learn from a perfectly balanced dataset. As you iterate this process, new trees will learn from mostly different majority class cases, favoring diverse trees. We then aggregate the results, using majority for prediction. The full process is described below by the authors:

1. For each iteration in random forest, draw a bootstrap sample from the minority class. Randomly draw the same number of cases, with replacement, from the majority class.

2. Induce a classification tree from the data to maximum size, without pruning. The tree is induced with the CART algorithm, with the following modification: At each node, instead of searching through all variables for the optimal split, only search through a set of mtry randomly selected variables.

3. Repeat the two steps above for the number of times desired. Aggregate the predictions of the ensemble and make the final prediction.

Python code

You can use Balanced Random Forest algorithm in your projects with the imbalanced-learn library.

from sklearn.model_selection import cross_validate

from imblearn.ensemble import BalancedRandomForestClassifierclf = BalancedRandomForestClassifier()

scores = cross_validate(clf, X_train, y_train, cv=10, scoring="roc_auc")

scores["test_roc_auc"].mean()

Easy Ensemble

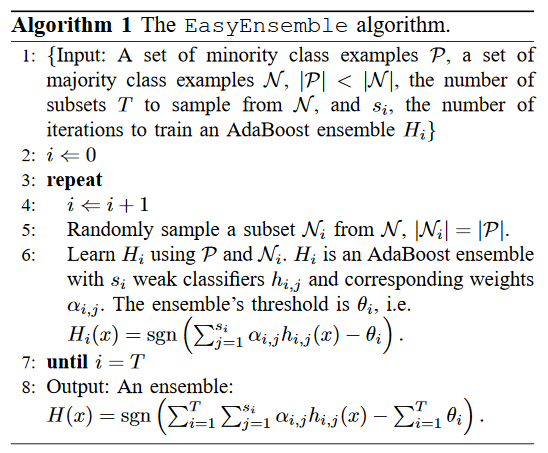

In the paper Exploratory Undersampling for Class-Imbalance Learning, the authors Xu-Ying Liu, Jianxin Wu and Zhi-Hua Zhou describe two algorithms to deal with imbalanced datasets. Let’s start with the first one: Easy Ensemble.

Similarly to the Balanced Random Forest algorithm, Easy Ensemble uses bootstrap samples to generate T balanced sub-problems; however, the Easy Ensemble algorithm is based on AdaBoost and “uses the samples to generate boosted ensembles while Balanced RF uses samples to train decision trees randomly”. As a result, “the ith sub-problem [of the Easy Ensemble] is an Adaboost classifier Hi, an ensemble Si with weak classifiers“. The final output “is a single ensemble, but it looks like an ‘ensemble of ensembles’”.

Quick recall on Adaboost as it never hurts (from Machine learning mastery: Bagging and Random Forest for Imbalanced Classification):

“AdaBoost works by first fitting a decision tree on the dataset, then determining the errors made by the tree and weighing the examples in the dataset by those errors so that more attention is paid to the misclassified examples and less to the correctly classified examples. A subsequent tree is then fit on the weighted dataset intended to correct the errors. The process is then repeated for a given number of decision trees.”

The step by step process of Easy Ensemble from the authors is described below:

Python code

Easy Ensemble is also available in the imbalanced-learn library. You can find the related documentation here.

from imblearn.ensemble import EasyEnsembleClassifier

from sklearn.model_selection import cross_validateeec = EasyEnsembleClassifier()

scores = cross_validate(eec, X_train, y_train, cv=10, scoring="roc_auc")

scores["test_roc_auc"].mean()

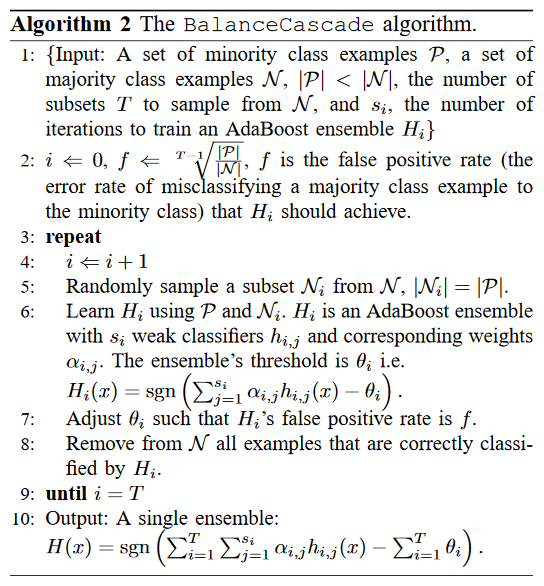

Balanced Cascade

As with Easy Ensemble, the algorithm is based on AdaBoost. The main idea of Balanced Cascade is to exclude examples from the majority class once they have been trained and correctly classified. As we create new Adaboost ensembles, the algorithm is trained on new majority class examples that it never previously saw (or never correctly guessed). Therefore, the majority class size decreases at each new ensemble’s creation iteration. Similarly to the algorithms we saw before, ensemble are trained on balanced classes.

“After H1 is trained, if an example x1 is correctly classified to be in the majority class by H1, it is reasonable to conjuncture that x1 is somewhat redundant in N, given that we already have H1. Thus we can remove some correctly classified majority class examples from N“.

The final prediction is not done on a majority vote but rather in a slightly different manner: “H(x) predicts positive if and only if all Hi(i=1,2,…,T) predict positive”.

You can find the algorithm’s description below (source)

Python code

It doesn’t look like this algorithm is easily available in Python or R. I found the following code, once again from the imbalanced-learn package. Example from the author:

>>> from collections import Counter

>>> from sklearn.datasets import make_classification

>>> from imblearn.ensemble import BalanceCascade

>>> X, y = make_classification(n_classes=2, class_sep=2,

... weights=[0.1, 0.9], n_informative=3, n_redundant=1, flip_y=0,

... n_features=20, n_clusters_per_class=1, n_samples=1000, random_state=10)

>>> print('Original dataset shape {}'.format(Counter(y)))

Original dataset shape Counter({1: 900, 0: 100})

>>> bc = BalanceCascade(random_state=42)

>>> X_res, y_res = bc.fit_sample(X, y)

>>> print('Resampled dataset shape {}'.format(Counter(y_res[0])))

Resampled dataset shape Counter({...})SMOTEBoost

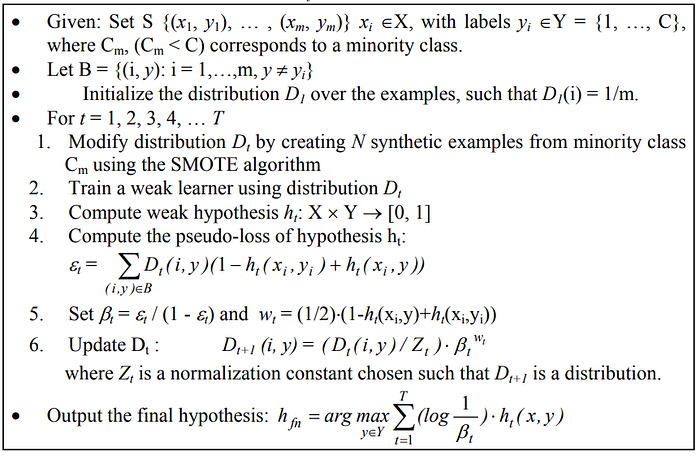

Another algorithm to try is SMOTEBoost. The concept is described in the SMOTEBoost: Improving Prediction of the Minority Class in Boosting paper. SMOTE is an oversampling technique and if you need to refresh your memory on what it does, you can check the article SMOTE explained for noobs or read the original paper (SMOTE: Synthetic Minority Over-sampling Technique). SMOTE will help us to “improve the accuracy over the minority classes” while we combine it with “boosting to maintain accuracy over the entire data set”. On a side note, it is good to remember that Boosting mainly reduces bias while Bagging tends to reduce variance. As the previous algorithm, SMOTEBoost is based on AdaBoost.

“Our goal is to better model the minority class in the data set, by providing the learner not only with the minority class instances that were misclassified in previous boosting iterations, but also with a broader representation of those instances, and with minimal accuracy of the majority class. We want to improve the overall accuracy of the ensemble by focusing on the difficult minority (positive) class cases, as we want to model this class better. The goal is to improve our True Positives (TP)”.

SMOTE is introduced in each round of boosting in order to increase the number of minority class samples. Based on this new distribution, we train a weak learner. After each boosting iteration, the error-estimate is calculated based on the original training set. Note that the amount of SMOTE is a key parameter that shouldn’t be guessed. The authors suggest using a validation set to discover what is the ideal number of SMOTE you should use.

Step by step description of the algorithm by the authors:

R code

You can use the ‘ebmc’ library in R (Cran documentation) to use SMOTEBoost.

# code taken from the ebmc guideline doc

data("iris")

iris <- iris[1:70, ]

iris$Species <- factor(iris$Species,

levels = c("setosa", "versicolor"),

labels = c("0", "1"))

model1 <- sbo(Species ~ ., data = iris, size = 10, over = 100, alg = "c50")Sources

- Using Random Forest to Learn Imbalanced Data

- Exploratory Undersampling for Class-Imbalance Learning

- Addressing the curse of Imbalanced Training Sets: One-sided Selection

- Data mining for imbalanced datasets

- Imblearn user guide

- SMOTE explained for noobs

- SMOTE: Synthetic Minority Over-sampling Technique

- SMOTEBoost: Improving Prediction of the Minority Class in Boosting

- Applied Machine Learning 2019 — Lecture 11 — Imbalanced data by Andreas Mueller

- Machine learning mastery: Tour of Evaluation Metrics for Imbalanced Classification

- Machine learning mastery: Bagging and Random Forest for Imbalanced Classification

- R embc package documentation